As large-scale foundation models become increasingly embedded in safety-critical applications, the need for transparency and trust between humans and autonomous systems grows more pressing. This is particularly true in domains such as transportation, medical care, and defence, where human-autonomy teaming (HAT) is becoming a cornerstone of modern operations. A recent study by Xiangqi Kong, Yang Xing, Antonios Tsourdos, Ziyue Wang, Weisi Guo, Adolfo Perrusquia, and Andreas Wikander examines the under-explored topic of Explainable Interfaces (EI) within HAT systems, offering a human-centric perspective that complements existing research in Explainable Artificial Intelligence (XAI).

The study begins by clarifying the distinctions between key concepts: Explainable Interface (EI), explanations, and model explainability. This clarification is crucial for providing researchers and practitioners with a structured understanding of how these elements interact within HAT systems. By doing so, the researchers aim to bridge the gap between the technical intricacies of autonomous systems and the human factors that are essential for effective collaboration.

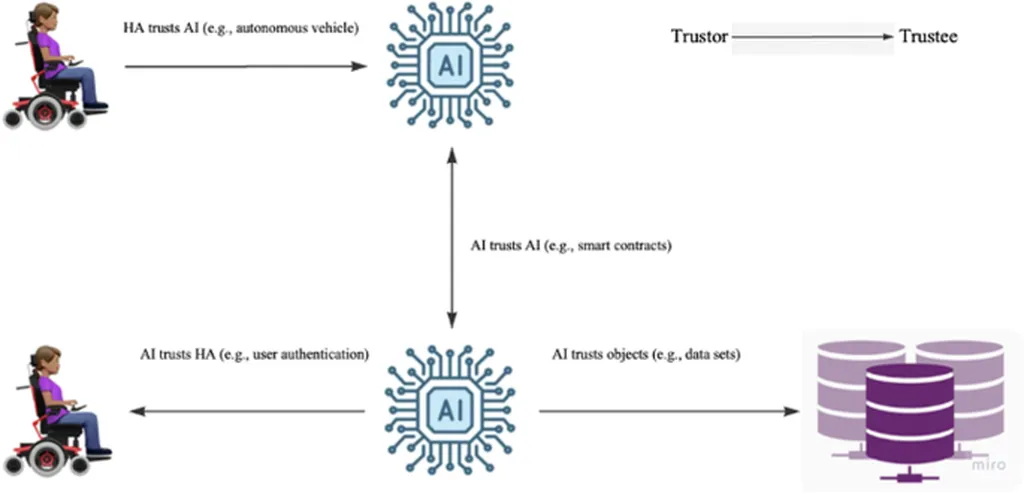

One of the most significant contributions of this research is the introduction of a novel framework for EI, specifically designed to address the unique challenges posed by HAT. This framework is built upon extensive surveys across various disciplines, including XAI, HAT, psychology, and Human-Computer Interaction (HCI). By synthesizing insights from these fields, the researchers offer a comprehensive approach to integrating XAI into HAT systems, thereby enhancing the mutual understanding and trust between humans and autonomous entities.

The evaluation framework proposed in the study takes a holistic perspective, encompassing model performance, human-centered factors, and group task objectives. This multi-faceted approach means that the effectiveness of an EI is assessed not only in terms of technical accuracy but also in terms of its impact on human users and the overall success of collaborative tasks. This is particularly important in safety-critical applications, where the stakes are high and the margin for error is minimal.
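To make the three evaluation dimensions concrete, the following is a minimal sketch of how scores for an EI study might be grouped and compared. It is not the authors' method: the class `EIEvaluation`, the metric names, the 0-to-1 score scale, and the unweighted averaging are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class EIEvaluation:
    """Scores for one explainable-interface study, grouped by dimension.

    Hypothetical structure: metric names and the 0-to-1 scale are
    illustrative assumptions, not taken from the study.
    """
    model_performance: dict[str, float] = field(default_factory=dict)
    human_centered: dict[str, float] = field(default_factory=dict)
    group_task: dict[str, float] = field(default_factory=dict)

    def summary(self) -> dict[str, float]:
        """Return the unweighted mean score of each dimension that has metrics."""
        dimensions = {
            "model_performance": self.model_performance,
            "human_centered": self.human_centered,
            "group_task": self.group_task,
        }
        return {
            name: mean(scores.values())
            for name, scores in dimensions.items()
            if scores  # skip dimensions with no recorded metrics
        }

    def weakest_dimension(self) -> Optional[str]:
        """Name the lowest-scoring dimension, or None if nothing was recorded."""
        scores = self.summary()
        return min(scores, key=scores.get) if scores else None


# Example with made-up scores: strong model metrics do not hide a weak
# human-centered result, because each dimension is reported separately.
report = EIEvaluation(
    model_performance={"explanation_fidelity": 0.9},
    human_centered={"trust_calibration": 0.5, "perceived_workload": 0.7},
    group_task={"task_completion": 0.8},
)
print(report.summary())
print(report.weakest_dimension())
```

Keeping the dimensions separate, rather than collapsing them into one number, reflects the point above that technical accuracy alone does not establish that an interface works for the human-autonomy team.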

The study also outlines future directions for research and development in the field of EI within HAT systems. By identifying key areas for further exploration, the researchers provide a roadmap for advancing the state of the art in XAI and HAT, ultimately contributing to the development of more transparent, trustworthy, and effective autonomous systems.

In conclusion, this research represents a significant step forward in the quest to make autonomous systems more understandable and trustworthy to human users. By focusing on the design, development, and evaluation of Explainable Interfaces, the study offers valuable insights and practical guidance for researchers and practitioners in the field of XAI. As autonomous systems continue to play an increasingly important role in safety-critical applications, the findings of this study will be instrumental in shaping the future of human-autonomy teaming.