Machine learning (ML) models are increasingly being considered for battlefield operations. Their deployment, however, exposes them to adversarial attacks that can leak sensitive data and erode strategic advantages. A recent study by Tyler Shumaker, Jessica Carpenter, David Saranchak, and Nathaniel D. Bastian introduces a pipeline designed to assess and mitigate the risks posed by model inversion attacks (MIAs), a critical concern in the defence sector.

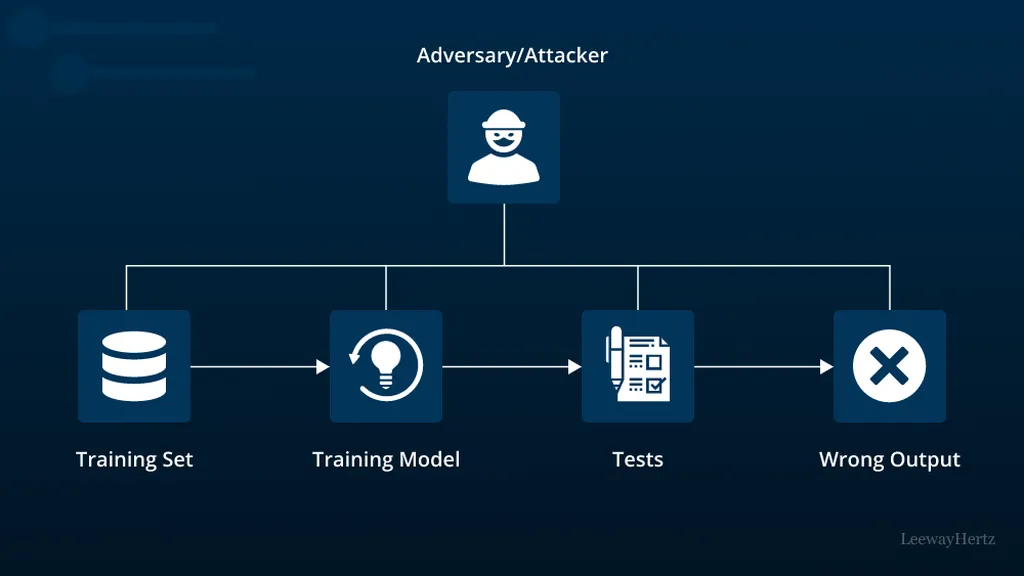

The study highlights the need for robust developmental test and evaluation (DT&E) tools that quantify the privacy risks posed by MIAs. In a model inversion attack, an adversary uses a trained ML model to reconstruct sensitive information about the data it was trained on, potentially exposing confidential training data. Current DT&E practice struggles with this for two reasons: judging an inversion is subjective, and the reconstructed outputs are often hard to interpret. Together these make it difficult to assess inversion quality and to scale evaluation across diverse model architectures and data modalities.

To address these challenges, the researchers developed an automated, scalable DT&E pipeline that quantifies the risk of data privacy loss from MIAs. The pipeline introduces four adversarial risk dimensions for measuring privacy loss. It uses vision language models (VLMs) to interpret the outputs of inversion techniques automatically, which improves both the accuracy and the scalability of privacy loss analysis. The researchers demonstrated the pipeline using multiple MIA techniques and VLMs configured for zero-shot classification and image captioning.
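To make the role of a zero-shot VLM more concrete, here is a minimal sketch of one hypothetical way such a classifier could turn a batch of inverted images into numbers. It assumes a CLIP-style VLM has already produced a similarity score between each inverted image and a text prompt for each candidate class. The function names `softmax` and `zero_shot_recovery`, and both metrics they compute, are illustrative assumptions; they are not the paper's four risk dimensions or its implementation.

```python
import math
from typing import Sequence


def softmax(scores: Sequence[float]) -> list[float]:
    """Convert raw image-text similarity scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_recovery(
    scores_per_image: Sequence[Sequence[float]],
    target_labels: Sequence[int],
) -> tuple[float, float]:
    """Score model inversions with a HYPOTHETICAL zero-shot VLM judge.

    scores_per_image[i][c] is ASSUMED to be the VLM's similarity between inverted
    image i and a text prompt for class c (e.g. "a photo of a {class}").
    target_labels[i] is the class the attacker tried to invert for image i.

    Returns (recovery_rate, mean_target_confidence):
      - recovery_rate: fraction of inversions the VLM assigns to the targeted class
      - mean_target_confidence: average VLM probability on the targeted class
    """
    if len(scores_per_image) != len(target_labels):
        raise ValueError("need one target label per inverted image")
    if not target_labels:
        return 0.0, 0.0

    hits = 0
    confidence_sum = 0.0
    for scores, target in zip(scores_per_image, target_labels):
        probs = softmax(scores)
        # Top-1 zero-shot prediction for this inverted image.
        predicted = max(range(len(probs)), key=probs.__getitem__)
        hits += int(predicted == target)
        confidence_sum += probs[target]
    n = len(target_labels)
    return hits / n, confidence_sum / n


if __name__ == "__main__":
    # Toy scores for three inverted images over three candidate classes.
    scores = [[2.0, 0.5, 0.1], [0.2, 0.1, 3.0], [0.0, 1.5, 0.2]]
    targets = [0, 1, 1]
    rate, confidence = zero_shot_recovery(scores, targets)
    print(f"recovery rate: {rate:.2f}, mean target confidence: {confidence:.2f}")
```

Under these assumptions, a high recovery rate would suggest that the inversions expose recognisable class information. In a real assessment such a score would be only one signal, alongside others such as captioning-based judgements of what the inverted images reveal.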

The study benchmarks the pipeline using state-of-the-art MIAs from the computer vision domain, focusing on an image classification task of a kind commonly used in military applications. The results show that the pipeline improves the effectiveness and scalability of privacy loss analysis, giving the defence and security sectors a practical tool for this kind of assessment.

As military forces increasingly rely on ML models for operational advantage, the ability to assess and mitigate privacy risks becomes paramount. Because the automated DT&E pipeline is designed to adapt to various ML architectures and data types, it offers a scalable way to measure how exposed a model is to inversion attacks before it is deployed. By making inversion quality more interpretable and quantifiable, the pipeline enables defence practitioners to make informed decisions about which models to field and how to protect them, helping maintain a strategic edge in an increasingly complex threat landscape.

In summary, the pipeline developed by Shumaker, Carpenter, Saranchak, and Bastian is a significant advance in ML security. By addressing the twin challenges of privacy risk assessment and scalability, this research paves the way for more secure and effective deployment of ML models in military applications. As the defence sector continues to evolve, such advances will be crucial for safeguarding sensitive information and maintaining operational integrity. Read the original research paper here.