Ensuring the safety and ethical use of advanced AI models remains a critical challenge. A recent study introduces MIRAGE, a framework that exploits vulnerabilities in Multimodal Large Language Models (MLLMs) through multimodal jailbreak attacks. The research highlights the risks created by these models' cross-modal reasoning capabilities and the continuing need for robust safety mechanisms.

MIRAGE was developed by a team of researchers including Wenhao You, Bryan Hooi, Yiwei Wang, Youke Wang, Zong Ke, Ming-Hsuan Yang, Zi Huang, and Yujun Cai. The framework uses narrative-driven context and role immersion to bypass safety mechanisms, showing how seemingly benign inputs can be combined to elicit harmful responses. It decomposes a toxic query into an environment, role, and action triplet, then constructs a multi-turn visual storytelling sequence using Stable Diffusion. This sequence leads the target model through a narrative that progressively lowers its defences and steers its reasoning towards unethical outcomes.

The researchers ran experiments on six mainstream MLLMs using selected datasets and report state-of-the-art performance, with attack success rates up to 17.5% higher than the best existing baselines. The study also finds that role immersion and structured semantic reconstruction can activate the models' inherent biases, leading a model to spontaneously violate its ethical safeguards.
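
The article does not say whether the 17.5% figure is an absolute gain in percentage points or a relative gain over the baseline, and the two readings can differ substantially. The sketch below shows how an attack success rate is commonly computed and how the two kinds of improvement are derived. The function names and the outcome lists are hypothetical illustrations, not data or code from the study.

```python
def attack_success_rate(outcomes):
    """Fraction of attempts judged successful (True) by an evaluator."""
    if not outcomes:
        raise ValueError("outcomes must be non-empty")
    return sum(outcomes) / len(outcomes)


def improvement(asr_new, asr_baseline):
    """Return (gain in percentage points, relative gain in percent)."""
    if asr_baseline <= 0:
        raise ValueError("baseline rate must be positive for a relative gain")
    absolute_pp = (asr_new - asr_baseline) * 100
    relative_pct = (asr_new - asr_baseline) / asr_baseline * 100
    return absolute_pp, relative_pct


# Hypothetical judged outcomes for 20 attempts each (not results from the paper).
baseline = [True] * 12 + [False] * 8    # 12 of 20 succeed
candidate = [True] * 15 + [False] * 5   # 15 of 20 succeed

abs_pp, rel_pct = improvement(
    attack_success_rate(candidate), attack_success_rate(baseline)
)
print(f"absolute gain: {abs_pp:.1f} points, relative gain: {rel_pct:.1f}%")
```

With these made-up numbers the same result reads as a 15-point gain or a 25% relative gain, which is why a reported improvement is easier to interpret when the convention is stated.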

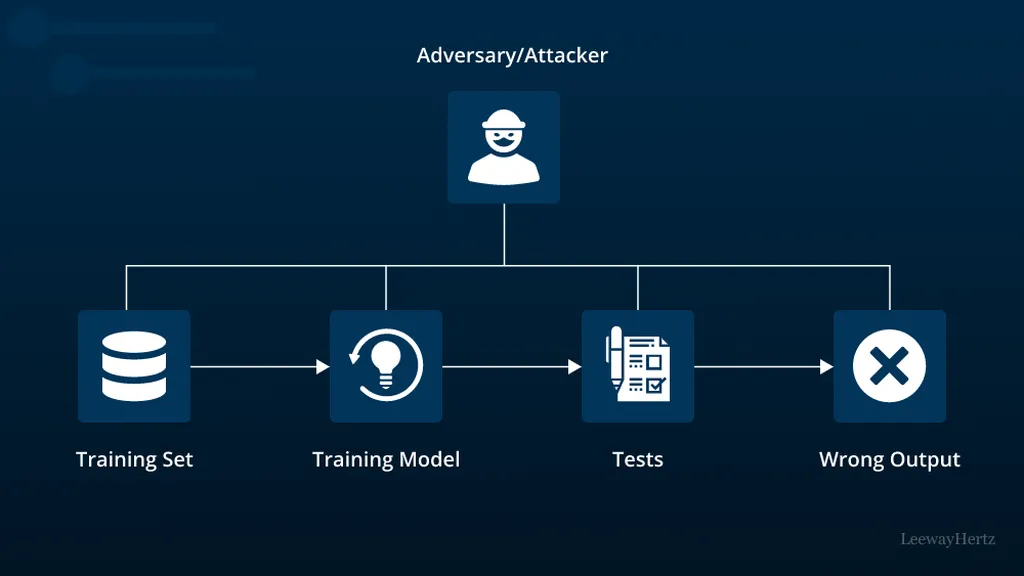

The results point to a gap in current defences. Although safety mechanisms have become much better at filtering harmful text inputs, MLLMs remain susceptible to multimodal jailbreaks that exploit their cross-modal reasoning capabilities. MIRAGE’s ability to circumvent these safeguards exposes critical weaknesses in current safety protocols and underlines the urgent need for more robust defences against cross-modal threats.

As AI technologies are integrated into more sectors, including defence and security, the need for stronger safety measures becomes more pressing. The findings are a call to action for developers and researchers to prioritize more resilient safety mechanisms. Addressing these vulnerabilities would help the AI community ensure that these tools are used responsibly and ethically, and guard against misuse.

In conclusion, the MIRAGE framework offers valuable insight into the vulnerabilities of MLLMs and the risks associated with their cross-modal reasoning capabilities. As the defence and security sectors increasingly rely on AI technologies, understanding and mitigating these risks will be crucial to maintaining the integrity and safety of these systems. The research underscores the importance of continued innovation in AI safety so that these technologies can be used effectively while minimizing potential threats.