As artificial intelligence (AI) advances rapidly, the ethical implications of deploying generative AI have become a focal point of debate, particularly in sectors where life-altering decisions are made under pressure. A recent study by David Oniani, Jordan Hilsman, Yifan Peng, COL Ronald K. Poropatich, COL Jeremy C. Pamplin, LTC Gary L. Legault, and Yanshan Wang draws parallels between military and healthcare environments. It argues that healthcare urgently needs a robust ethical framework to guide its use of generative AI.

In 2020, the U.S. Department of Defense adopted ethical principles for the use of AI in military operations, recognizing that high-stakes decision-making requires clear guidelines. Healthcare professionals face comparable life-and-death situations daily, where decisions must be both rapid and well informed. The study notes that generative AI could transform healthcare by drawing on large volumes of health data, including electronic health records, electrocardiograms, and medical images. Integrating the technology into clinical practice, however, raises significant ethical concerns, particularly around transparency, bias, and the potential to widen existing health disparities.

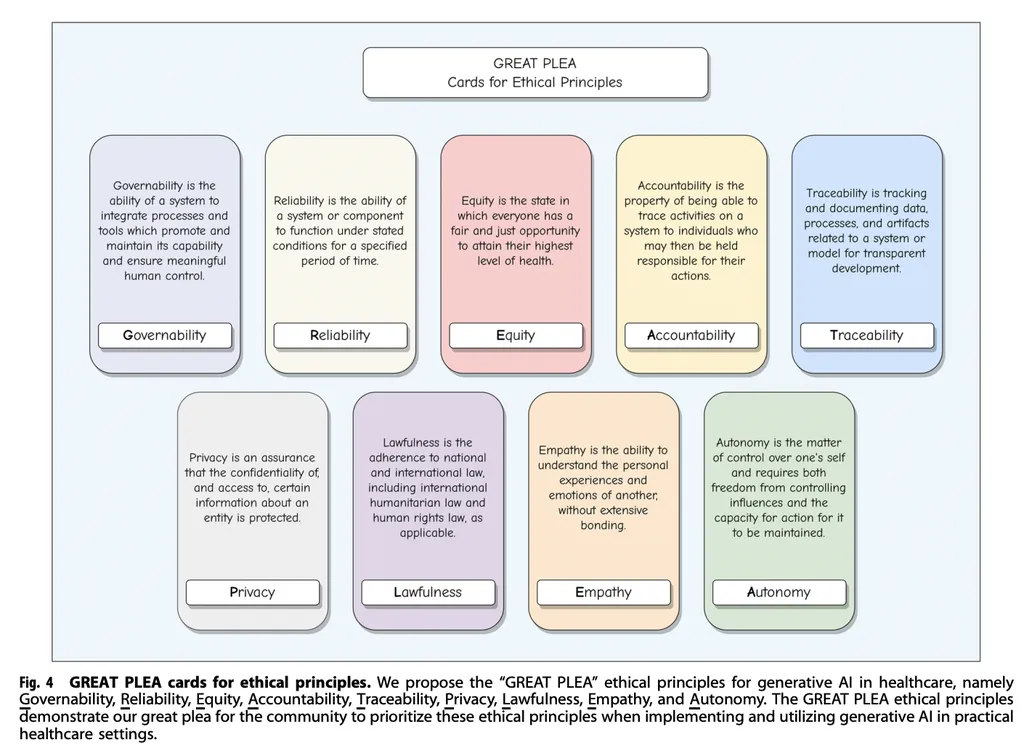

To address these challenges, the researchers propose a comprehensive set of ethical principles called GREAT PLEA:

- **Governance**: AI systems are managed and regulated effectively.
- **Reliability**: AI outputs are accurate and consistent.
- **Equity**: biases that could lead to unequal treatment are mitigated.
- **Accountability**: responsible parties are held answerable for AI-driven decisions.
- **Traceability**: a clear record of AI processes is maintained.
- **Privacy**: sensitive patient data is safeguarded.
- **Lawfulness**: AI use complies with legal standards.
- **Empathy**: human values are taken into account.
- **Autonomy**: patients' decision-making rights are respected.

The GREAT PLEA framework is designed to anticipate and address the ethical dilemmas that generative AI introduces into healthcare, rather than reacting to them after the fact. By adopting these principles, healthcare providers and policymakers can navigate the complexities of AI deployment so that the technology enhances, rather than compromises, patient care. The framework also aims to foster a culture of responsibility and transparency throughout AI development and application.

The study also highlights the importance of interdisciplinary collaboration in developing ethical guidelines for AI. By drawing on the experience of both military and healthcare professionals, the researchers address the ethical challenges of generative AI from multiple perspectives. This collaboration underscores the need for ongoing dialogue and adaptation as AI technologies continue to evolve.

In conclusion, the GREAT PLEA ethical principles offer a proactive and comprehensive approach to integrating generative AI into healthcare. By addressing key ethical concerns, the framework aims to ensure that AI technologies are deployed responsibly, improving patient care while mitigating potential risks. As healthcare systems worldwide grapple with the implications of AI, this study provides a valuable roadmap for ethical and effective AI integration.