A recent study by Arkadiusz Czuba applies the Vision Transformer (ViT) architecture to the classification of micro-Doppler spectrograms, which surveillance radar systems use to distinguish unmanned aerial vehicles (UAVs) from helicopters. The study presents ViT as an alternative to traditional convolutional neural networks (CNNs) for this image classification task.

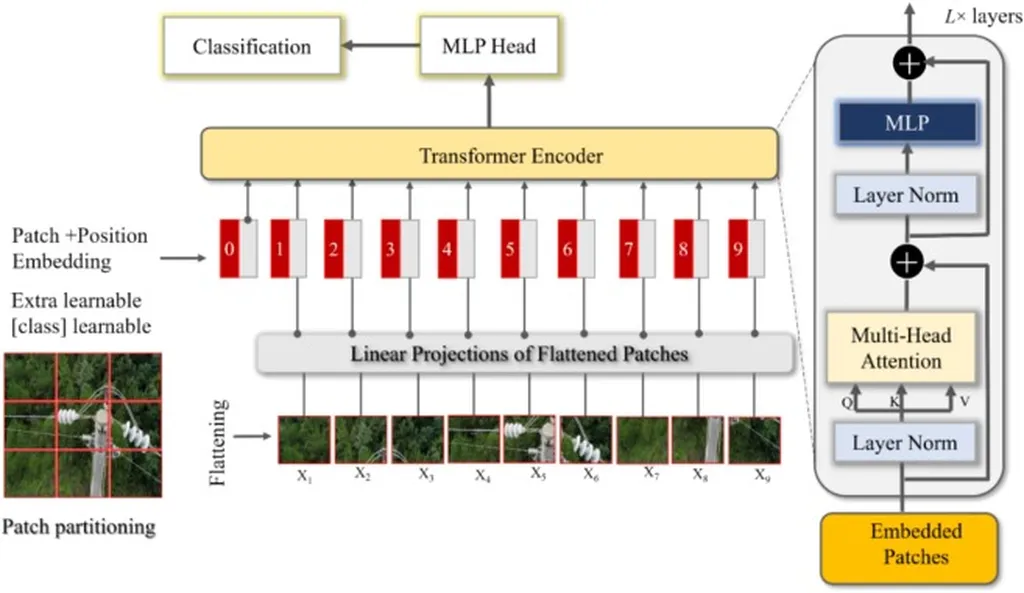

The study addresses a key limitation of CNNs: they either handle varying image resolutions poorly or require the input to be resized. This is particularly challenging for micro-Doppler spectrograms generated with different integration times. Czuba’s work demonstrates that the ViT architecture can classify these spectrograms without resizing, preserving critical details and improving accuracy.
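The article does not describe how the paper feeds spectrograms of different sizes to the model. As a minimal sketch of the general idea, assuming a standard ViT-style tokenizer, the example below splits a spectrogram into fixed-size patches so that a wider spectrogram simply yields a longer token sequence instead of being rescaled. The function name `patchify`, the patch size of 16, and the zero-padding step are illustrative assumptions, not details from the study.

```python
import numpy as np

def patchify(spectrogram: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split a 2-D spectrogram into flattened, non-overlapping patches.

    Hypothetical helper: the patch size and zero-padding are assumptions.
    """
    h, w = spectrogram.shape
    # Zero-pad so both dimensions are multiples of the patch size.
    # The pixel values themselves are never interpolated or rescaled.
    pad_h = (-h) % patch
    pad_w = (-w) % patch
    padded = np.pad(spectrogram, ((0, pad_h), (0, pad_w)))
    rows, cols = padded.shape[0] // patch, padded.shape[1] // patch
    # Reshape to (rows, patch, cols, patch), then bring the two patch axes together.
    blocks = padded.reshape(rows, patch, cols, patch).transpose(0, 2, 1, 3)
    # One row per patch: (number of tokens, patch * patch).
    return blocks.reshape(rows * cols, patch * patch)

# Two spectrograms with the same Doppler axis but different time extents.
short = patchify(np.random.rand(128, 64))
long = patchify(np.random.rand(128, 200))
print(short.shape, long.shape)
```

Both inputs produce tokens of the same dimensionality and differ only in sequence length, which a transformer encoder can process as long as its position encoding supports variable lengths.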

Preprocessing of the spectrograms is a critical part of this research. Before classification, a denoising algorithm based on a modified Dual Tree Complex Wavelet Transform was used to improve the signal-to-noise ratio and the visibility of micro-Doppler features. This step gives the ViT model cleaner input data to work with.
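The article gives no details of the modified Dual Tree Complex Wavelet Transform, so the sketch below does not attempt to reproduce it. Instead it illustrates the general principle of wavelet-domain denoising with a much simpler stand-in: a one-level 2-D Haar transform followed by soft thresholding of the detail coefficients. All function names and the threshold value are assumptions made for illustration.

```python
import numpy as np

S = np.sqrt(2.0)

def haar2d(x):
    """One-level orthonormal 2-D Haar transform (both dimensions must be even)."""
    a = (x[0::2] + x[1::2]) / S          # low-pass along rows
    d = (x[0::2] - x[1::2]) / S          # high-pass along rows
    ll = (a[:, 0::2] + a[:, 1::2]) / S   # approximation
    lh = (a[:, 0::2] - a[:, 1::2]) / S   # detail sub-bands
    hl = (d[:, 0::2] + d[:, 1::2]) / S
    hh = (d[:, 0::2] - d[:, 1::2]) / S
    return ll, lh, hl, hh

def ihaar2d(ll, lh, hl, hh):
    """Inverse of haar2d."""
    a = np.empty((ll.shape[0], ll.shape[1] * 2))
    d = np.empty_like(a)
    a[:, 0::2], a[:, 1::2] = (ll + lh) / S, (ll - lh) / S
    d[:, 0::2], d[:, 1::2] = (hl + hh) / S, (hl - hh) / S
    x = np.empty((a.shape[0] * 2, a.shape[1]))
    x[0::2], x[1::2] = (a + d) / S, (a - d) / S
    return x

def soft_threshold(c, t):
    """Shrink coefficients toward zero by t; small ones become exactly zero."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)

def denoise(x, t):
    """Stand-in denoiser: Haar + soft thresholding, NOT the paper's DTCWT method."""
    ll, lh, hl, hh = haar2d(x)
    return ihaar2d(ll, soft_threshold(lh, t), soft_threshold(hl, t),
                   soft_threshold(hh, t))

rng = np.random.default_rng(0)
clean = np.ones((64, 64))                       # toy "spectrogram"
noisy = clean + 0.3 * rng.normal(size=clean.shape)
restored = denoise(noisy, t=1.0)
print(np.mean((noisy - clean) ** 2), np.mean((restored - clean) ** 2))
```

A dual-tree complex transform is generally preferred over a plain real wavelet transform for this kind of task because it is approximately shift-invariant and more directionally selective, but the shrinkage step follows the same pattern.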

The experiments used real data collected from a short-range military surveillance radar. The ViT model achieved an accuracy of 97.76% in classifying UAVs and helicopters. This result underscores the potential of the ViT architecture in defence and security applications, where precise identification of aerial objects is paramount.

To better understand the model’s performance, the study analyzed the raw self-attention maps generated by the ViT. These maps show which parts of a spectrogram the model attends to, highlighting the regions that contribute most to the classification. Such interpretability is important for building trust in machine learning models, especially in high-stakes applications like military surveillance.
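To make the notion of a raw self-attention map concrete, the sketch below computes one for a single attention head using standard scaled dot-product attention. The random weights, the token count, and the 8 x 4 patch grid are placeholder assumptions. In a trained ViT the projection matrices would come from the model, and the article does not say which layers or heads the study inspected.

```python
import numpy as np

def attention_map(tokens, w_q, w_k):
    """Row-normalized attention weights for one head (scaled dot-product)."""
    q = tokens @ w_q
    k = tokens @ w_k
    scores = q @ k.T / np.sqrt(q.shape[-1])
    # Subtract the row maximum before exponentiating for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
grid = (8, 4)                                   # assumed patch grid
tokens = rng.normal(size=(1 + grid[0] * grid[1], 64))  # class token + patches
w_q = rng.normal(size=(64, 16))                 # placeholder projections
w_k = rng.normal(size=(64, 16))

attn = attention_map(tokens, w_q, w_k)
# Attention from the class token to each patch, laid out on the patch grid
# so it can be overlaid on the spectrogram as a heat map.
cls_to_patches = attn[0, 1:].reshape(grid)
print(attn.shape, cls_to_patches.shape)
```

Each row of `attn` sums to one, so the class-token row can be read as how strongly each spectrogram patch is weighted by that head.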

The research has practical implications for surveillance systems. By demonstrating that the ViT architecture can classify micro-Doppler spectrograms effectively, Czuba’s work supports the development of more reliable systems. Accurately distinguishing between different types of aerial vehicles can enhance situational awareness, improve threat detection, and support decision-making in defence operations.

Moreover, the success of the ViT model in this context suggests that transformer-based architectures could be applied to a broader range of computer vision tasks in the defence sector. As researchers continue to explore and refine these models, we can expect further advancements in the accuracy and efficiency of surveillance technologies.

In conclusion, Arkadiusz Czuba’s research represents a notable step in applying machine learning to defence and security. By combining the Vision Transformer architecture with wavelet-based denoising, the study achieves 97.76% accuracy in classifying micro-Doppler spectrograms of UAVs and helicopters. The work highlights the potential of transformers in computer vision and offers a reference point for the development of future surveillance systems.