Sinan Arda, a researcher at the intersection of technology policy and geopolitics, has introduced a comprehensive taxonomy for assessing the (geo)political risks posed by artificial intelligence. His work, titled *Taxonomy to Regulation: A (Geo)Political Taxonomy for AI Risks and Regulatory Measures in the EU AI Act*, provides a structured framework for understanding the multifaceted threats AI presents, particularly in the context of European regulatory efforts.

Arda’s taxonomy categorizes AI risks into four distinct groups: Geopolitical Pressures; Malicious Usage; Environmental, Social, and Ethical Risks; and Privacy and Trust Violations. Each category encompasses specific threats, such as AI-driven disinformation campaigns, the weaponization of AI by state and non-state actors, and the erosion of privacy through unregulated data collection. By systematically mapping these risks, the research offers policymakers and security analysts a clearer picture of the challenges AI introduces to global stability and national security.

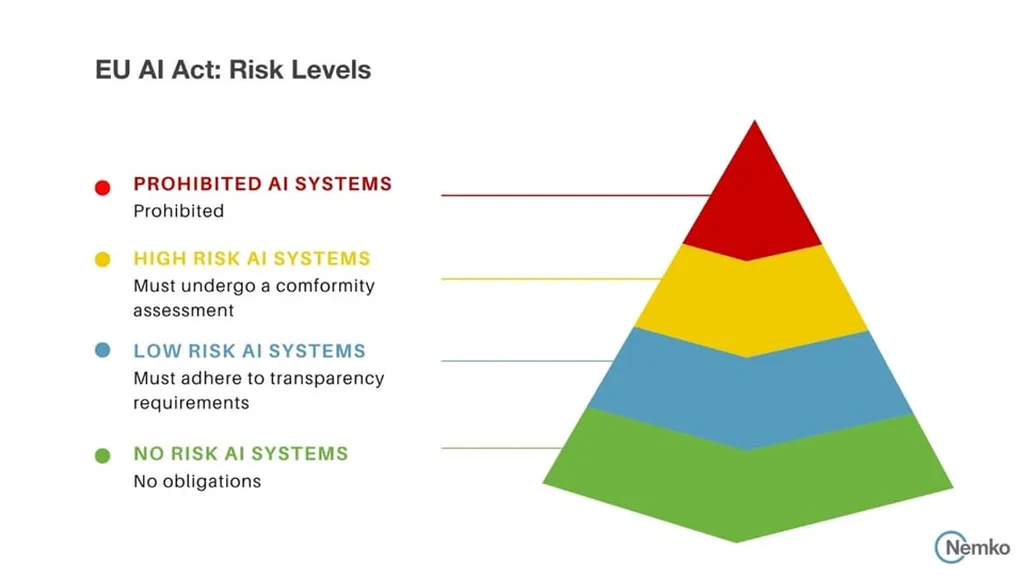

The study also evaluates the European Union’s AI Act, adopted by the European Parliament in March 2024, as a regulatory response to these risks. While the Act is a landmark piece of legislation aimed at mitigating AI-related harms, Arda’s analysis reveals several gaps. For instance, the Act’s exclusion of systems designed exclusively for military purposes from its obligations creates a significant loophole, particularly in an era where AI is increasingly integrated into defence technologies. Additionally, the Act classifies General Purpose AI (GPAI) models as posing systemic risk only above excessively high thresholds, and so may fail to capture emerging threats from smaller, more agile AI systems.

Arda argues that the EU AI Act, while a step in the right direction, requires adjustments to address these shortcomings. He suggests tightening regulations around open-source AI models, which can be exploited by malicious actors, and revisiting the thresholds for identifying systemic risks to ensure comprehensive coverage. The study underscores the need for continuous regulatory evolution to keep pace with AI’s rapid advancements and the evolving tactics of those who might misuse it.

Beyond regulatory improvements, the research highlights the importance of international cooperation in managing AI risks. Given the global nature of AI development and deployment, Arda emphasizes that unilateral regulations may prove insufficient. Instead, a coordinated approach among like-minded nations could help establish a more robust framework for mitigating AI-related threats.

Arda’s work serves as a timely reminder that AI’s potential for both good and harm demands proactive governance. As AI continues to permeate every sector, from defence to healthcare, the need for a nuanced, forward-looking regulatory strategy becomes ever more critical. By providing a clear taxonomy of risks and a critical assessment of existing regulations, this research contributes to the ongoing debate on how best to harness AI’s benefits while minimising its dangers.